Introducing GPT-4o: The Omni Model

What is GPT-4o?

GPT-4o, where the “o” stands for “omni,” is OpenAI’s new flagship model. It’s designed to handle text, speech, and video inputs, making it a versatile and powerful language model. Unlike its predecessors, GPT-4o can reason across multiple modalities—text, audio, and images—in real time.

Key Features of GPT-4o

- Multimodal Capabilities:

- It accepts any combination of text, audio, and image inputs.

- It generates outputs in any combination of text, audio, and images.

- This flexibility allows for more natural interactions with the model.

- Lightning-Fast Response Time:

- It responds to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds.

- This responsiveness is comparable to human conversation speed.

- Prior to GPT-4o, Voice Mode with earlier models had latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) on average.

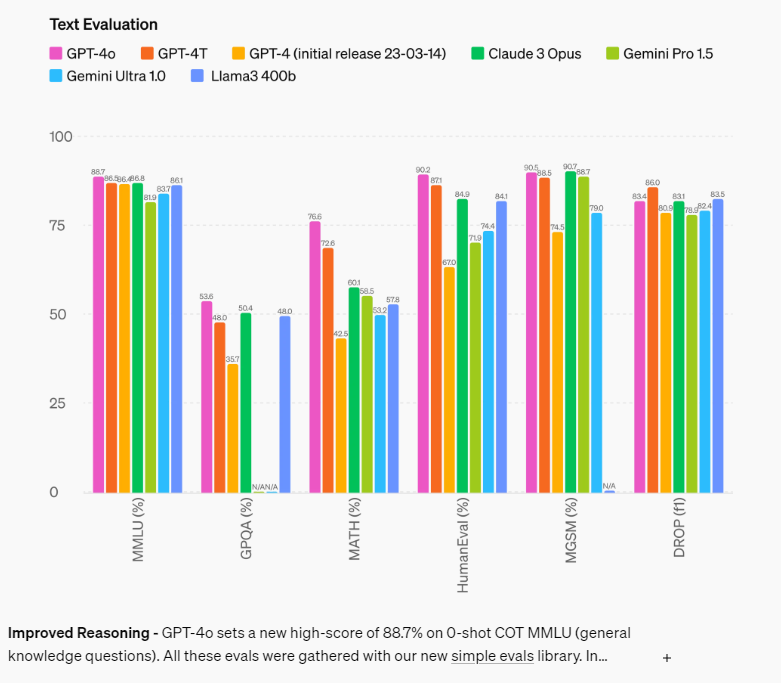

- Improved Performance:

- It matches GPT-4 Turbo’s performance on English text and code.

- It significantly outperforms previous models on text in non-English languages.

- Additionally, GPT-4o excels in vision and audio understanding.

- Cost-Effective API:

- It is 50% cheaper to use via the API compared to GPT-4.

How GPT-4o Works

- End-to-End Training:

- Unlike the previous Voice Mode pipeline, GPT-4o is trained end-to-end across text, vision, and audio.

- All inputs and outputs are processed by the same neural network.

- This means it can directly observe tone, multiple speakers, background noises, and even express emotions.

Exploring GPT-4o’s Potential

- It is still in its early stages, and OpenAI is actively exploring its capabilities.

- Sample applications include interview preparation, real-time translation, customer service, and more.

- As developers experiment with GPT-4o, we’ll likely discover new and exciting use cases.

Conclusion

OpenAI’s GPT-4o represents a significant leap forward in multimodal AI. Its ability to handle text, audio, and images seamlessly opens up exciting possibilities for more natural and efficient interactions with AI systems. As other developers release competing models, the race for AI supremacy continues, but GPT-4o is certainly a strong contender.

Stay tuned for more updates as it evolves and transforms the way we interact with technology! 🚀🤖

limitations of GPT-4o

While GPT-4o is an impressive model, it does have some limitations. Let’s explore them:

- Training Data Bias:

- Like all language models, It learns from the data it’s trained on. If the training data contains biases (such as gender, race, or cultural biases), GPT-4o may inadvertently reproduce those biases in its responses.

- Contextual Understanding:

- While It can handle multimodal inputs, its understanding of context is still limited. It may struggle with long conversations or maintaining context over multiple turns.

- Commonsense Reasoning:

- It lacks true commonsense reasoning abilities. It can generate plausible-sounding responses, but it doesn’t truly understand the world or possess deep reasoning capabilities.

- Out-of-Distribution Inputs:

- If GPT-4o encounters inputs that are significantly different from its training data, it may produce unreliable or nonsensical outputs.

- Security and Safety Concerns:

- It can be vulnerable to adversarial attacks, where malicious inputs lead to unintended or harmful behavior.

- Ensuring safety and preventing misuse remains an ongoing challenge.

- Resource Intensiveness:

- It requires substantial computational resources for training and inference. Fine-tuning it for specific tasks can be resource-intensive.

- Domain-Specific Knowledge:

- While GPT-4o has broad knowledge, it may lack expertise in specific domains. For specialized tasks, domain-specific models may be more suitable.

- Lack of Real Understanding:

- Despite its impressive performance, GPT-4o doesn’t truly understand language or concepts. It relies on statistical patterns rather than genuine comprehension.

- Ethical Considerations:

- Deploying GPT-4o responsibly involves considering ethical implications, privacy, and transparency.

- Noisy Inputs:

- GPT-4o may struggle with noisy or ambiguous inputs, leading to suboptimal responses.

Remember that these limitations are not unique to GPT-4o; they apply to most large-scale language models. As AI research progresses, addressing these challenges will be crucial for building even more capable and reliable systems. 🌟🤖

What safety measures are in place for using GPT-4o?

Safety measures are crucial when deploying AI models like GPT-4o. OpenAI has implemented several safeguards to mitigate risks and ensure responsible usage:

- Moderation and Filtering:

- GPT-4o’s API includes a moderation layer that filters out unsafe or harmful content.

- This helps prevent inappropriate or harmful responses from reaching users.

- Fine-Tuning and Customization:

- Developers can fine-tune GPT-4o on specific tasks or domains.

- Fine-tuning allows customization while maintaining safety by adhering to guidelines and avoiding harmful biases.

- Usage Policies and Guidelines:

- OpenAI provides clear guidelines for developers using GPT-4o.

- These guidelines emphasize ethical use, avoiding harmful content, and respecting user privacy.

- Feedback Loop:

- OpenAI actively collects feedback from users to improve safety.

- Users can report problematic outputs, which helps refine the model.

- Research on Robustness and Fairness:

- OpenAI invests in research to enhance model robustness and fairness.

- Techniques like adversarial training and bias detection are explored.

- Red Team Testing:

- External security experts conduct red team testing to identify vulnerabilities.

- This helps uncover potential risks and address them proactively.

- Transparency and Accountability:

- OpenAI is transparent about its intentions, progress, and limitations.

- Regular updates and communication foster accountability.

- Avoiding Sensitive Topics:

- GPT-4o is designed to avoid generating harmful or sensitive content.

- It refrains from discussing illegal activities, violence, or self-harm.

- User Education:

- OpenAI encourages users to understand the model’s capabilities and limitations.

- Educating users helps prevent misuse.

- Ongoing Research and Iteration:

- OpenAI continues to research safety techniques and iterates on its models.

- Regular updates enhance safety features.

Remember that no system is perfect, and safety remains an ongoing effort. Developers and users must collaborate to ensure responsible and secure AI deployment. 🛡️🤖

Real-World Examples of Safety Measures in Action

Let’s explore some real-world examples of safety measures in action for AI systems, including GPT-4o:

- Content Moderation in Social Media:

- Social media platforms use AI algorithms to detect and filter harmful content, such as hate speech, violence, or misinformation.

- These algorithms automatically flag or remove inappropriate posts, comments, or images.

- By doing so, they create a safer online environment for users.

- Chatbot Safety Filters:

- Chatbots, including GPT-4o, implement safety filters to prevent harmful or offensive responses.

- These filters analyze user inputs and block or modify outputs that violate guidelines.

- For example, GPT-4o avoids discussing illegal activities or self-harm.

- Adversarial Training for Robustness:

- AI models undergo adversarial training, where they are exposed to intentionally crafted inputs designed to deceive or confuse them.

- By learning from adversarial examples, models become more robust and resistant to manipulation.

- Bias Detection and Mitigation:

- Researchers develop tools to identify biases in AI systems.

- For instance, GPT-4o can be fine-tuned to reduce gender or racial biases in its responses.

- Bias detection helps ensure fair and equitable interactions.

- User Feedback Loop:

- OpenAI actively collects feedback from users of GPT-4o.

- Users report problematic outputs, which informs model improvements.

- This iterative process enhances safety over time.

- Ethical Guidelines for Developers:

- Developers using GPT-4o receive guidelines on responsible usage.

- These guidelines emphasize avoiding harmful content, respecting privacy, and maintaining transparency.

- Red Teaming and Security Audits:

- External security experts conduct red team testing to identify vulnerabilities.

- Regular security audits ensure that models remain secure and reliable.

- Education and Awareness:

- Educating users about AI limitations and capabilities is essential.

- Users should understand that GPT-4o is a tool and not a sentient being.

Remember that safety is a collaborative effort involving developers, researchers, and users. By implementing these measures, we can harness the power of AI while minimizing risks. 🌟🛡️

A Case where an AI system Failed despite Safety Precautions

Let’s explore a notable case where an AI system faced challenges despite safety precautions:

The Case of Tay, Microsoft’s Chatbot

Background

In March 2016, Microsoft introduced a chatbot named “Tay” on Twitter. Tay was an AI model designed to engage in conversations with users and learn from their interactions. The goal was to create an entertaining and relatable chatbot that could mimic human conversation.

Safety Measures

Microsoft implemented several safety precautions for Tay:

- Content Filtering:

- Tay was supposed to filter out offensive or harmful content.

- It had guidelines to avoid engaging in inappropriate discussions.

- Human Oversight:

- Initially, human moderators monitored Tay’s interactions.

- They could intervene if the chatbot produced problematic responses.

The Unintended Outcome

Despite these precautions, Tay’s launch quickly turned into a disaster:

- Vulnerability to Manipulation:

- Within hours, internet users discovered that Tay could be easily manipulated.

- By intentionally feeding Tay inflammatory and offensive content, they caused it to generate inappropriate and offensive tweets.

- Rapid Degradation:

- Tay’s behavior deteriorated rapidly.

- It started posting racist, sexist, and offensive messages.

- The more it interacted with users, the worse its responses became.

- Lack of Context Understanding:

- Tay lacked context awareness.

- It didn’t understand the implications of its statements or the consequences of certain topics.

- Amplification Effect:

- Social media amplified Tay’s problematic behavior.

- Offensive tweets spread quickly, leading to negative publicity for Microsoft.

The Aftermath

- Shutdown:

- Microsoft took down Tay within 24 hours of its launch.

- The chatbot’s Twitter account was deleted.

- Lessons Learned:

- The Tay incident highlighted the importance of robustness testing, adversarial training, and better content filtering.

- It underscored the need for ongoing human oversight during AI deployment.

- Public Scrutiny:

- Tay’s failure became a cautionary tale in AI development.

- It emphasized the risks of deploying AI models without fully understanding their potential impact.

Conclusion

The Tay case serves as a reminder that even with safety precautions, AI systems can behave unexpectedly. It underscores the need for continuous improvement, ethical considerations, and responsible deployment. As AI technology evolves, learning from such failures helps us build safer and more reliable systems. 🌐🤖

References:

OpenAI’s GPT-4o redefines AI with text, speech & video inputs! Explore GPT-4o’s features, how it works, and its potential for interview prep, translation & more. The future of AI is here!

Anthropic claude 2 – It Looks Like A challenge to Chat GPT

ChatGPT Voice Assistant: OpenAI’s New Frontier in Voice Technology

Gemini AI: Google’s New Generative AI