H100: Nvidia's Amazing New GPU Priced at $40,000

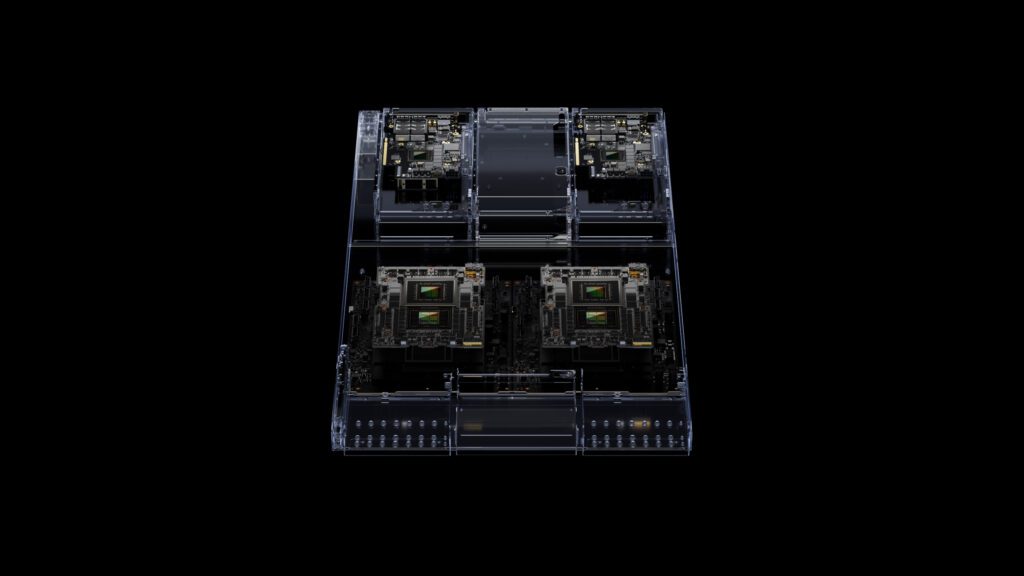

The H100 is a new generation of data center GPU designed to accelerate a wide range of AI and high-performance computing (HPC) workloads. It is based on the Hopper architecture, which was Nvidia’s most advanced GPU architecture at the time of the H100’s launch.

It has a number of features that make it stand out from other GPUs. These include:

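- A new Transformer Engine designed to accelerate transformer models, such as the large language models used in natural language processing (NLP).

- Fourth-generation Tensor Cores that, according to Nvidia, deliver about twice the matrix-math throughput per streaming multiprocessor of the previous generation on equivalent data types.

- Fourth-generation NVLink, which allows for more efficient communication between GPUs.

- A PCIe Gen5 interface that provides up to about 64 GB/s of bandwidth in each direction over an x16 link.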
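For reference, the theoretical bandwidth of a PCIe Gen5 x16 link can be worked out from the per-lane signaling rate (32 GT/s) and the 128b/130b line encoding. The sketch below is a back-of-the-envelope calculation, not a measurement: the function name is made up for illustration, and protocol overhead is ignored, so real-world throughput will be lower.

```python
# Theoretical PCIe link bandwidth from lane rate, lane count, and line encoding.
# Protocol overhead (packet headers, flow control) is ignored, so this is an
# upper bound rather than a measured figure.

def pcie_bandwidth_gb_per_s(gt_per_s: float, lanes: int,
                            payload_bits: int = 128,
                            encoded_bits: int = 130) -> float:
    """Return one-direction bandwidth in GB/s (1 GB = 1e9 bytes)."""
    raw_gbit = gt_per_s * lanes                        # raw gigabits per second
    usable_gbit = raw_gbit * payload_bits / encoded_bits  # remove encoding overhead
    return usable_gbit / 8                             # bits -> bytes


gen5_x16 = pcie_bandwidth_gb_per_s(32.0, 16)
print(f"PCIe Gen5 x16: {gen5_x16:.1f} GB/s per direction, "
      f"{2 * gen5_x16:.1f} GB/s bidirectional")
```

Passing `16.0` as the lane rate gives the equivalent PCIe Gen4 figure, which is about half as much.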

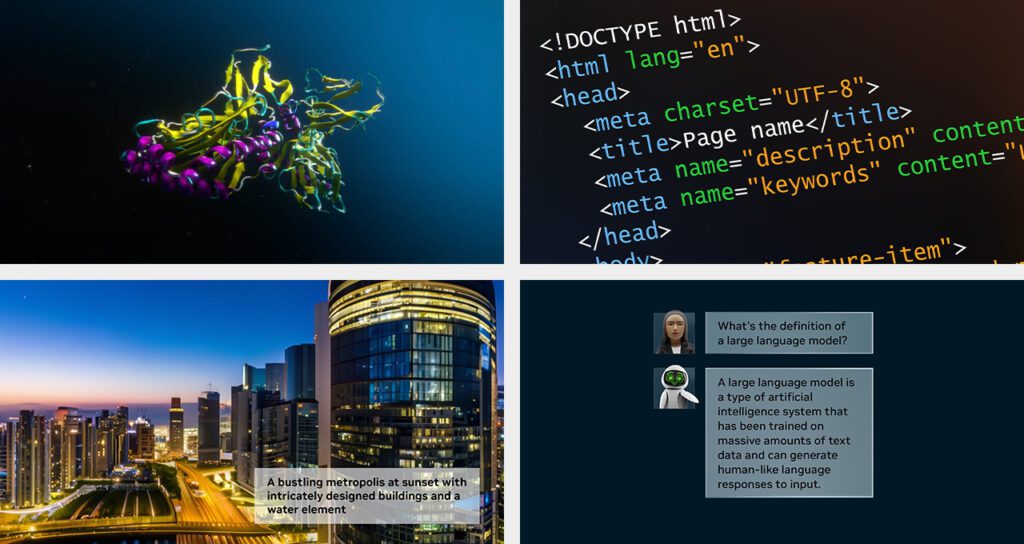

The H100 is expected to be used in a wide range of applications, including:

- Natural language processing

- Image recognition

- Speech recognition

- Drug discovery

- Financial modeling

- Weather forecasting

- Self-driving cars

The H100 is a significant step forward in GPU technology. At launch it was Nvidia’s most powerful data center GPU, and it is expected to change how AI and HPC workloads are run.

Here are some specific examples of how the H100 is being used:

- Google is reportedly using the H100 to train Transformer-based language models such as Transformer-XL.

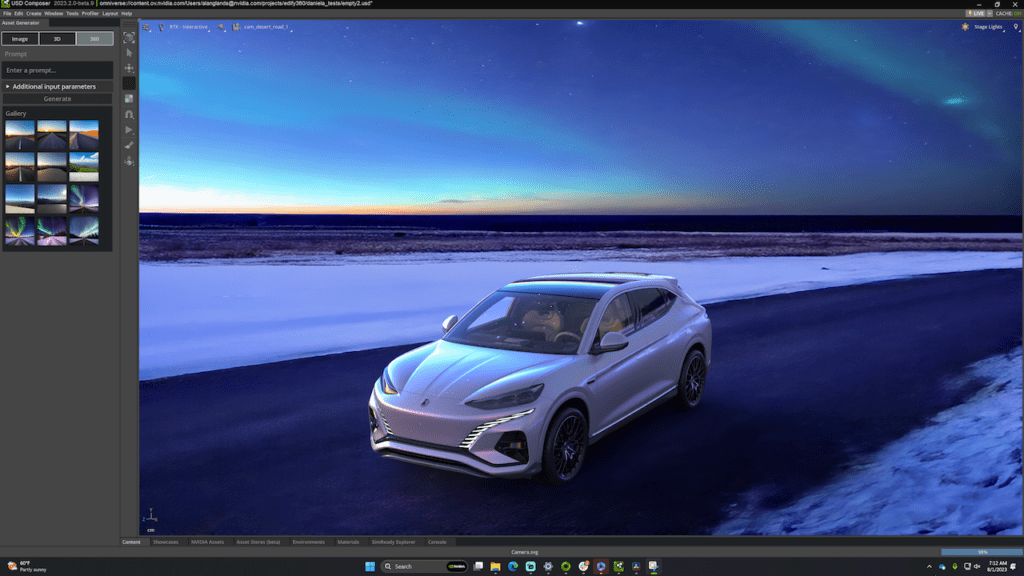

- NVIDIA is using the H100 to accelerate its Omniverse platform, which is a real-time simulation and collaboration platform for 3D design and engineering.

- Cerebras Systems, by contrast, powers its systems with its own Wafer-Scale Engine, the world’s largest AI chip, which competes with the H100 rather than running on it.

The H100 is still in the early stages of deployment, but it has the potential to change how we interact with computers and the world around us.

Nvidia on H100

Here is what Nvidia says about its H100 GPU:

NVIDIA H100 Tensor Core GPUs and NVIDIA Quantum-2 InfiniBand networking are now available on Microsoft Azure. This new instance allows users to scale generative AI, HPC, and other applications with a click of a button.

The NVIDIA H100 GPU is a supercomputing-class GPU that delivers high performance through architectural innovations, including fourth-generation Tensor Cores, a new Transformer Engine for accelerating LLMs, and the latest NVLink technology.

The NVIDIA Quantum-2 CX7 InfiniBand with 3,200 Gbps cross-node bandwidth ensures seamless performance across the GPUs at massive scale.
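The 3,200 Gbps figure is easier to relate to GPU memory and PCIe numbers once it is converted to bytes. The sketch below assumes the total comes from eight 400 Gb/s InfiniBand links per VM, one per GPU; that breakdown is an assumption for illustration and is not stated in Nvidia's text above.

```python
# Convert the quoted cross-node bandwidth into per-link and per-byte terms.
LINKS_PER_VM = 8      # assumption: one InfiniBand link per GPU, eight GPUs per VM
GBIT_PER_LINK = 400   # assumption: 400 Gb/s per link

total_gbit = LINKS_PER_VM * GBIT_PER_LINK   # aggregate gigabits per second
total_gbyte = total_gbit / 8                # gigabytes per second (8 bits per byte)

print(f"Aggregate: {total_gbit} Gbps = {total_gbyte:.0f} GB/s per VM")
print(f"Per GPU:   {GBIT_PER_LINK} Gbps = {GBIT_PER_LINK / 8:.0f} GB/s")
```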

The ND H100 v5 VMs are ideal for training and running inference for increasingly complex LLMs and computer vision models. These neural networks drive the most demanding and compute-intensive generative AI applications, including question answering, code generation, audio, video and image generation, speech recognition, and more.

The ND H100 v5 VMs achieve up to 2x speedup in LLMs like the BLOOM 175B model for inference versus previous generation instances.

NVIDIA H100 Tensor Core GPUs on Azure provide enterprises with the performance, versatility, and scale to supercharge their AI training and inference workloads. The combination streamlines the development and deployment of production AI with the NVIDIA AI Enterprise software suite integrated with Azure Machine Learning for MLOps, and delivers record-setting AI performance in industry-standard MLPerf benchmarks.

In addition, by connecting the NVIDIA Omniverse platform to Azure, NVIDIA and Microsoft are providing hundreds of millions of Microsoft enterprise users with access to powerful industrial digitalization and AI supercomputing resources.

Here is a summary of the key capabilities of the NVIDIA H100 GPU:

- Supercomputing-class performance

- Fourth-generation Tensor Cores

- New Transformer Engine for accelerating LLMs

- Latest NVLink technology

- NVIDIA Quantum-2 CX7 InfiniBand with 3,200 Gbps cross-node bandwidth

These capabilities make the NVIDIA H100 GPU ideal for a wide range of generative AI, HPC, and other applications.

Nvidia Applications

Images courtesy of the Nvidia press kit.

Nvidia H100 Price

The H100 is currently priced at around $40,000. The exact price varies with the retailer and the configuration of the GPU. For example, the H100 with 80GB of HBM3 memory is priced at $30,602.99 on CDW.com.

The high price of the H100 reflects its advanced features and performance. It is among the most powerful GPUs available and is designed for demanding AI and HPC workloads. The H100 is also in high demand, which is driving up the price.

If you are looking for a GPU for gaming or other less demanding tasks, there are other options available that are much more affordable. However, if you need the best available performance for AI or HPC workloads, the Nvidia H100 is one of the strongest options.

Here are some other prices for the Nvidia H100 from different retailers:

- Amazon: $39,995

- Newegg: $38,999

- B&H Photo: $36,999
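To put these figures side by side, the short sketch below summarizes the prices quoted in this article, including the CDW listing mentioned earlier. The numbers are simply the ones listed here and may not reflect current retail prices; the variable names are illustrative.

```python
from statistics import mean

# Prices in USD as quoted in this article; they may be out of date.
listed_prices = {
    "CDW (80GB HBM3)": 30602.99,
    "Amazon": 39995.00,
    "Newegg": 38999.00,
    "B&H Photo": 36999.00,
}

cheapest = min(listed_prices, key=listed_prices.get)   # retailer with lowest price
priciest = max(listed_prices, key=listed_prices.get)   # retailer with highest price
average = mean(listed_prices.values())
spread = listed_prices[priciest] - listed_prices[cheapest]

print(f"Lowest:  {cheapest} at ${listed_prices[cheapest]:,.2f}")
print(f"Highest: {priciest} at ${listed_prices[priciest]:,.2f}")
print(f"Average: ${average:,.2f} (spread ${spread:,.2f})")
```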

Nvidia Valuation close to the Mammoth Trillion Dollar Mark