Anthropic Claude 2

Anthropic Claude 2 – It Looks Like a Challenge to ChatGPT

An American AI firm has introduced a competing chatbot named Claude 2 as a response to ChatGPT. Claude 2 has the capability to summarize extensive volumes of text and adheres to a set of safety principles derived from various sources including the Universal Declaration of Human Rights.

Anthropic, the company behind this development, has made Claude 2 accessible to the public in the United States and the United Kingdom, coinciding with the ongoing discourse surrounding the safety and potential societal hazards of AI.

Headquartered in San Francisco, Anthropic characterizes its safety approach as “Constitutional AI,” which entails utilizing a predefined set of principles to guide the chatbot’s content generation and decision-making process.

The chatbot’s training incorporates principles derived from various documents, such as the 1948 UN declaration and Apple’s terms of service, which address contemporary concerns like data privacy and impersonation. An example of a principle employed by Claude 2, inspired by the UN declaration, is: “Please select the response that best supports and promotes freedom, equality, and a sense of brotherhood.”

Dr. Andrew Rogoyski from the Institute for People-Centred AI at the University of Surrey in the UK commented that Anthropic’s approach resembles the three laws of robotics formulated by science fiction writer Isaac Asimov, which include instructing robots not to harm humans. He expressed his view that Anthropic’s approach brings us closer to Asimov’s fictional laws of robotics by integrating principled responses into AI, thereby enhancing safety when using it.

Claude 2 follows the successful launch of ChatGPT by American competitor OpenAI, which was in turn followed by Microsoft’s Bing chatbot and Google’s Bard, both of which are likewise built on large language models.

Dario Amodei, the CEO of Anthropic, has discussed AI model safety with UK Prime Minister Rishi Sunak and US Vice President Kamala Harris. These conversations took place as part of senior tech delegations convened at Downing Street and the White House. Amodei has also endorsed a statement by the Center for AI Safety asserting that mitigating the risk of extinction from AI should be a global priority, comparable to mitigating the dangers of pandemics and nuclear war.

Anthropic claims that Claude 2 has the ability to summarize lengthy text blocks of up to 75,000 words, roughly equivalent to the novel “Normal People” by Sally Rooney. The Guardian conducted a test by asking Claude 2 to condense a 15,000-word report on AI from the Tony Blair Institute for Global Change into 10 bullet points, which the chatbot accomplished in less than a minute.

However, the chatbot has exhibited instances of “hallucinations” or factual errors. For example, it mistakenly asserted that AS Roma, rather than West Ham United, won the 2023 Europa Conference League. When asked about the outcome of the 2014 Scottish independence referendum, Claude 2 erroneously claimed that every local council area voted “no,” overlooking the votes in favor of independence in Dundee, Glasgow, North Lanarkshire, and West Dunbartonshire.

Meanwhile, the Writers’ Guild of Great Britain (WGGB) has called for an independent regulatory body for artificial intelligence (AI), citing a survey in which over 60% of UK authors expressed concern that the growing use of AI would reduce their income.

Additionally, the WGGB emphasized the need for AI developers to maintain detailed records of the data used to train their systems, so that writers can verify whether their work has been used. In the United States, authors have filed lawsuits over the use of their work to train the models behind chatbots.

In a policy statement released on Wednesday, the guild put forth several proposals. It recommended that AI developers should only use writers’ work with explicit permission, that AI-generated content should be clearly labeled, and that the government should not permit any copyright exceptions that would facilitate the scraping of writers’ content from the internet. AI has also become a prominent issue during the strike by the Writers Guild of America.

Source: The Guardian

Helpful, High-Quality Responses of Claude 2

According to Autumn Besselman, Head of People and Communications at Quora, users have praised Claude for providing detailed and easily understandable answers. They appreciate the natural conversational feel during interactions.

One Poe user remarked that Claude feels more conversational than ChatGPT. Another said that Claude is more interactive and creative in its storytelling.

A third user, impressed by Claude’s language skills and expertise, said they admired the way answers are presented: in-depth, yet explained simply.

Context Window of 100,000 tokens

Anthropic has significantly increased Claude’s context window from 9,000 tokens to 100,000 tokens, which is approximately equivalent to 75,000 words. This expansion allows businesses to provide extensive documents comprising hundreds of pages for Claude to process and comprehend. Consequently, conversations with Claude can now extend over several hours or even days.
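The arithmetic behind these figures can be sketched in a few lines of Python. This is only a rough illustration: it assumes the ratio implied by the numbers above (100,000 tokens for about 75,000 words), whereas real token counts depend on the tokenizer and on the text itself. The function names are hypothetical and are not part of any Anthropic API.

```python
# Ratio implied by the article: 100,000 tokens ~ 75,000 words.
# This is an approximation, not a real tokenizer.
TOKENS_IN_WINDOW = 100_000
WORDS_IN_WINDOW = 75_000


def estimate_tokens(word_count: int) -> int:
    """Estimate tokens for a text of `word_count` words, rounding up."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (word_count * TOKENS_IN_WINDOW + WORDS_IN_WINDOW - 1) // WORDS_IN_WINDOW


def fits_in_context(word_count: int, window: int = TOKENS_IN_WINDOW) -> bool:
    """Return True if the estimated token count fits in the context window."""
    return estimate_tokens(word_count) <= window


if __name__ == "__main__":
    # Hypothetical document sizes, in words.
    for words in (15_000, 75_000, 120_000):
        print(words, estimate_tokens(words), fits_in_context(words))
```

Under this estimate, a 15,000-word report would use roughly a fifth of the window, while a 120,000-word manuscript would need to be split before being submitted.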

Features of Claude 2

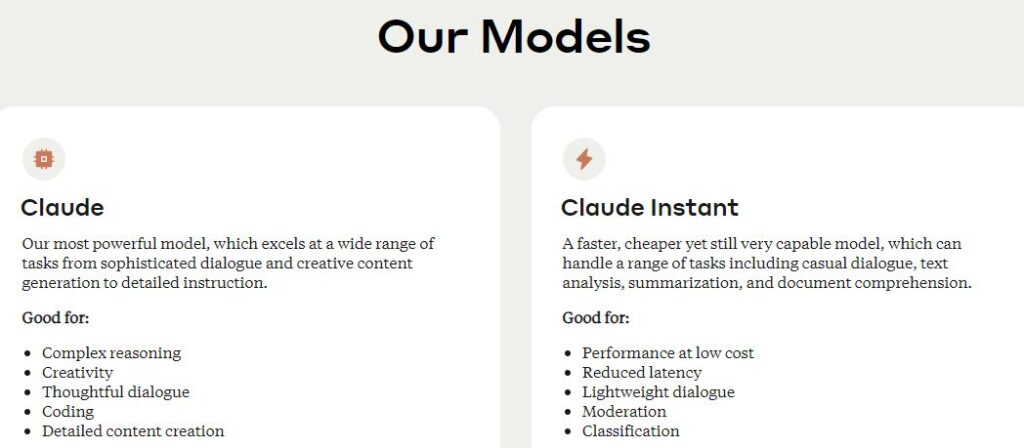

Claude:

- A highly robust and versatile model that demonstrates exceptional performance across a diverse array of tasks, including intricate dialogues, creative content generation, and comprehensive instructions.

Claude Instant:

- A quicker and more cost-effective alternative that retains substantial capabilities, suitable for various tasks like casual conversations, text analysis, summarization, and understanding complex documents.

Ideal for:

- Cost-effective performance

- Minimized response time

- Lightweight conversational interactions

- Content moderation

- Task classification

Anthropic on Claude 2

“We are excited to introduce our latest model, Claude 2. With enhanced performance, longer responses, and expanded accessibility, Claude 2 can now be accessed via API and our new public beta website, claude.ai. Based on feedback from our users, Claude is known for its conversational ease, transparent reasoning, reduced likelihood of generating harmful outputs, and improved memory capacity. We have made notable advancements in coding, mathematics, and reasoning compared to our previous models. For instance, our latest model achieved a score of 76.5% on the multiple-choice section of the Bar exam, surpassing Claude 1.3’s score of 73.0%. In comparison to college students applying to graduate school, Claude 2’s performance on the GRE reading and writing exams ranks above the 90th percentile, while its quantitative reasoning scores align with the median applicant.

Think of Claude as a friendly and enthusiastic colleague or personal assistant, capable of understanding natural language instructions to assist you with various tasks. The Claude 2 API is available for businesses at the same price as Claude 1.3. Additionally, individuals in the United States and the United Kingdom can begin using our beta chat experience today.”

As we strive to enhance the performance and safety of our models, we have made advancements in both the input and output capabilities of Claude. Users can now provide prompts with a maximum of 100,000 tokens, enabling Claude to process extensive technical documentation spanning hundreds of pages or even an entire book. Moreover, Claude is now capable of generating longer documents, ranging from memos and letters to stories, with a length of a few thousand tokens, all in a single session.

Furthermore, our latest model exhibits significant improvements in coding proficiency. On the Codex HumanEval, a Python coding assessment, Claude 2 achieved a score of 71.2%, surpassing the previous score of 56.0%. In the case of GSM8k, a comprehensive collection of grade-school math problems, Claude 2 achieved a score of 88.0%, compared to the previous score of 85.2%.

We have an exciting roadmap of capabilities planned for Claude 2, and we will gradually and iteratively implement them in the upcoming months, ensuring continuous improvement.

We have continuously iterated to enhance the safety measures underlying Claude 2, making it more resistant to producing offensive or dangerous outputs. Our internal red-teaming evaluation, which employs both automated testing and regular manual checks, assesses our models’ responses to a broad range of harmful prompts. In this evaluation, Claude 2 demonstrated a two-fold improvement in providing harmless responses compared to Claude 1.3. While no model is completely immune to potential vulnerabilities, we have employed various safety techniques and rigorous red-teaming practices, detailed in our references, to improve the quality of its outputs.

Claude 2 Now Available in US and UK

Claude 2 powers our chat experience and is now widely available in the United States and the United Kingdom. We are actively working towards expanding global availability in the coming months. Users can create an account and engage with Claude in natural language, seeking assistance with a wide range of tasks. Interacting with an AI assistant may require some trial and error, so we encourage users to explore our tips to maximize their experience with Claude.

Additionally, we are currently collaborating with numerous businesses utilizing the Claude API. Jasper, a generative AI platform enabling individuals and teams to scale their content strategies, is one of our partners. They have found that Claude 2 can effectively compete with other state-of-the-art models across various use cases, with notable strength in long-form and low-latency applications. Greg Larson, VP of Engineering at Jasper, expressed their satisfaction in being among the first to offer Claude 2 to their customers. He highlighted the benefits of enhanced semantics, updated knowledge training, improved reasoning for complex prompts, and the ability to seamlessly remix existing content with a context window three times larger than before. Larson emphasized their pride in helping their customers stay ahead through partnerships like the one with Anthropic.

Sourcegraph, a Code AI Platform for Coding Assistant Cody

Sourcegraph, a code AI platform, offers customers the coding assistant Cody, which leverages Claude 2’s improved reasoning capabilities to provide even more accurate answers to user queries. Additionally, Cody now incorporates up to 100,000 tokens of codebase context, allowing for a deeper understanding of the code. Claude 2 was trained on more recent data, enabling Cody to draw from knowledge about newer frameworks and libraries. Quinn Slack, CEO and Co-founder of Sourcegraph, emphasizes the importance of fast and reliable access to context specific to developers’ unique codebases and the significance of a large context window and strong general reasoning capabilities in AI coding. Slack further explains that with Claude 2, Cody is empowering more developers to create software that propels the world forward, making the developer workflow faster and more enjoyable.

We value your feedback as we strive to responsibly expand the deployment of our products. Our chat experience is currently in open beta, and it’s important for users to be aware that like all current models, Claude has the potential to generate inappropriate responses. AI assistants are most beneficial in everyday scenarios, such as summarizing or organizing information, and should not be relied upon in situations where physical or mental health and well-being are at stake.

Source: Anthropic
