Blackwell Delivers: A Quantum Leap in AI Performance

Nvidia’s Next-Gen AI Chip “Blackwell”

Introduction

Get ready for a revolution in artificial intelligence. Nvidia, the leading supplier of AI chips, has unveiled its highly anticipated next-generation GPU architecture, Blackwell. Named after the mathematician and statistician David Blackwell, the first Black scholar inducted into the National Academy of Sciences, Blackwell is positioned as a major step forward for AI applications. This blog post takes a close look at Blackwell, its capabilities, and how it compares with other AI chips on the market.

Blackwell: The “Woodstock of AI” Delivers

Every year, Nvidia’s GPU Technology Conference (GTC) generates excitement in the AI community, and the 2024 edition, which some observers dubbed the “Woodstock of AI,” was no different. In his GTC keynote, Nvidia CEO Jensen Huang introduced Blackwell, a GPU platform designed to train and run large, complex AI models faster than its predecessors.

Blackwell’s Groundbreaking Specs

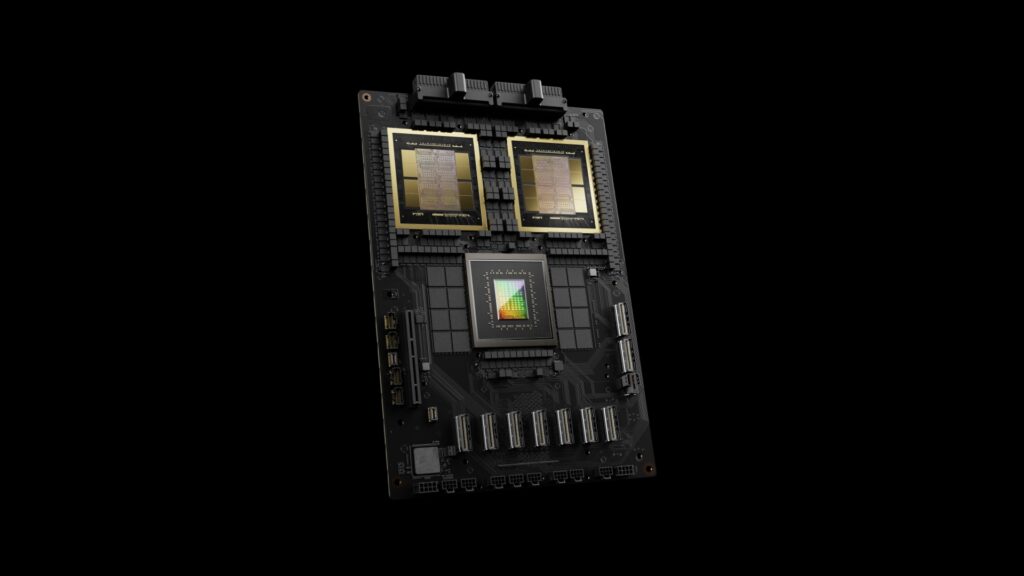

- 208 Billion Transistors: Blackwell packs a huge number of transistors, giving it the headroom to handle demanding AI workloads.

- Unmatched AI Processing Speed: According to Nvidia, Blackwell processes AI models and queries significantly faster than its previous chips, a major step forward for applications such as deep learning, natural language processing, and computer vision.

- Building on Hopper’s Legacy: Blackwell succeeds Hopper, the architecture behind the widely acclaimed H100 chip, which is named after computer science pioneer Grace Hopper. While Hopper has been a powerhouse for AI, Nvidia argues that ever-larger models demand even more powerful GPUs, and Blackwell is its answer.

Tech Giants Eager to Leverage Blackwell’s Power

Major tech companies such as Microsoft, Google (a subsidiary of Alphabet), and Oracle are eagerly awaiting Blackwell’s arrival. These companies have been major buyers of Nvidia’s H100 chips, and with Blackwell they expect substantial performance gains and faster AI development.

Blackwell vs. The Competition

Blackwell represents a significant leap forward in AI performance and computational power. Here’s how it stacks up against its predecessor, the H100, based on Nvidia’s published figures:

- Performance:

  - According to Nvidia, up to 4 times faster in AI training and up to 30 times faster in large language model inference than the H100. These figures come from Nvidia’s rack-scale GB200 NVL72 system compared with an equal number of H100 GPUs, not from a single chip in isolation.

  - Delivers up to 20 petaflops of AI compute from a single GPU, versus roughly 4 petaflops for the H100. Blackwell’s figure is quoted at FP4 precision and the H100’s at FP8, so the comparison is not like-for-like.

  - Uses a dual-die design that operates as a single, unified CUDA GPU.

- Transistors and Memory:

  - Packs 208 billion transistors, about 2.6 times the 80 billion in the H100/H200.

  - Features 192GB of HBM3e memory with 8 TB/s of bandwidth.

- Die Configuration:

  - Not a traditional single-die GPU: it consists of two reticle-limited dies that function as one unified unit.

  - Each die carries four HBM3e stacks, and the two dies are linked by a 10 TB/s chip-to-chip interface.

  - Built on TSMC’s custom 4NP process node.

- Consumer-Class Potential: While Blackwell is designed primarily for data centers, consumer-class Blackwell GPUs may follow in the future.

- Industry Endorsements: Tech CEOs including Sundar Pichai (Google) and Andy Jassy (Amazon) have voiced their excitement about Blackwell and its potential impact on their AI initiatives.
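The headline spec numbers are easier to interpret as ratios. The short Python sketch below works them out from the figures quoted in this post. The H100’s 80 billion transistor count and the precision labels (FP4 for Blackwell’s 20 petaflops, FP8 for the H100’s 4 petaflops) are supplied here as assumptions for illustration, and the dictionary and function names are hypothetical.

```python
# Back-of-envelope ratios from the spec figures quoted in this post.
# Assumptions (supplied for illustration): the H100 has 80 billion transistors,
# and its ~4 petaflops is FP8 while Blackwell's 20 petaflops is FP4.

BLACKWELL = {
    "transistors_bn": 208,  # billions of transistors
    "ai_pflops": 20,        # peak AI compute, assumed FP4
    "hbm_gb": 192,          # HBM3e capacity in GB
    "hbm_tb_per_s": 8,      # HBM3e bandwidth in TB/s
    "dies": 2,              # dual-die design
    "stacks_per_die": 4,    # HBM3e stacks per die
}
H100 = {"transistors_bn": 80, "ai_pflops": 4}  # ~4 PF assumed at FP8


def spec_ratios(new: dict, old: dict) -> dict:
    """Derive simple comparison figures from two spec dictionaries."""
    stacks = new["dies"] * new["stacks_per_die"]
    return {
        # 208 / 80: about 2.6x the transistors
        "transistor_ratio": new["transistors_bn"] / old["transistors_bn"],
        # 20 / 4: a 5x headline that mixes FP4 with FP8, so not like-for-like
        "compute_ratio": new["ai_pflops"] / old["ai_pflops"],
        # Capacity per HBM3e stack across 2 dies x 4 stacks
        "gb_per_stack": new["hbm_gb"] / stacks,
        # GB divided by TB/s yields milliseconds (1e9 B / 1e12 B/s = 1e-3 s):
        # the time to stream the whole memory once at peak bandwidth
        "full_memory_read_ms": new["hbm_gb"] / new["hbm_tb_per_s"],
    }


if __name__ == "__main__":
    for name, value in spec_ratios(BLACKWELL, H100).items():
        print(f"{name}: {value:.1f}")
```

Running it prints a transistor ratio of 2.6, a quoted compute ratio of 5.0, 24.0 GB per HBM3e stack, and about 24.0 ms to read the full 192GB once at peak bandwidth. The last figure is a rough ceiling on how quickly a memory-bound workload can touch every byte, not a benchmark result.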

What CEOs Are Saying About Blackwell

Industry leaders are raving about the potential of Nvidia’s groundbreaking Blackwell AI chip:

- Sundar Pichai (Google): Emphasizes the importance of infrastructure for AI platforms and says Google is excited to bring Blackwell to its products, services, and teams, including Google DeepMind.

- Andy Jassy (Amazon): Highlights the long-standing collaboration between AWS and Nvidia and says Blackwell will run exceptionally well on AWS, including in Project Ceiba, the AI supercomputer the two companies are building together.

- Michael Dell (Dell Technologies): Sees Blackwell as a key driver for innovation, enabling Dell to deliver next-generation AI solutions to customers.

- Demis Hassabis (DeepMind): Views Blackwell’s capabilities as critical for scientific advancements and problem-solving.

- Mark Zuckerberg (Meta): Expresses enthusiasm for using Blackwell to train open-source models and build the next generation of Meta AI products.

- Satya Nadella (Microsoft): Commits to offering customers the most advanced AI infrastructure through global deployment of Blackwell in Microsoft data centers.

- Sam Altman (OpenAI): Believes Blackwell’s performance will accelerate development of leading-edge AI models.

- Larry Ellison (Oracle): Highlights the importance of Blackwell’s power for uncovering actionable insights through AI, machine learning, and data analytics.

- Elon Musk (Tesla & xAI): Recognizes Nvidia hardware as the current leader in AI, implicitly endorsing Blackwell’s potential.

Stock Surge

- Although Nvidia’s stock was flat on the day of the Blackwell announcement, at the time of writing it was up more than 83% year-to-date and 241% over the previous 12 months.

- Investors see Nvidia as playing a pivotal role in shaping the AI landscape.
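Percentage gains are easier to compare as price multipliers. A minimal Python sketch using the two figures quoted above (83% year-to-date, 241% over 12 months) converts them and, assuming the 12-month window begins before January 1, backs out how much the stock had already gained before the year started. The function name `multiplier` is a hypothetical helper for illustration.

```python
# Convert the quoted percentage gains into price multipliers.
# The 83% and 241% figures are the post's at-the-time-of-writing numbers.


def multiplier(pct_gain: float) -> float:
    """A gain of p percent multiplies the price by (1 + p / 100)."""
    return 1 + pct_gain / 100


ytd = multiplier(83)        # price now / price at start of year
trailing = multiplier(241)  # price now / price 12 months ago

# Both ratios share the same "price now", so dividing them gives the
# start-of-year price relative to the price 12 months ago.
# Assumes the 12-month window begins before January 1.
before_year_start = trailing / ytd

print(f"YTD multiplier: {ytd:.2f}")
print(f"12-month multiplier: {trailing:.2f}")
print(f"Gain from 12 months ago to start of year: {(before_year_start - 1) * 100:.0f}%")
```

This prints multipliers of 1.83 and 3.41 and an implied gain of about 86% between the start of the 12-month window and January 1, which shows that much of the trailing-year rally happened before the year-to-date period began.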

Blackwell: A Catalyst for AI Innovation

Blackwell is a testament to engineering ingenuity. Nvidia is positioning the chip to push AI capabilities further and to drive a new wave of innovation across industries. Stay tuned for its official release to see how tech giants put its power to work.
